While doing all these non-linearity measurements, I started thinking about a kind of test to check reciprocity. What is reported here is not exactly what is meant by the definition of reciprocity, but it is related to it.

What is done is the following :

– the sensor is illuminated with a fixed DC-powered LED light source,

– at a camera gain setting equal to 1 (minimum value), the exposure time is adjusted such that the output value is about 75 % of saturation. Under the conditions present, the exposure time turned out to be 42.24 ms,

– the output signal of the sensor as well as its offset value were measured by averaging over a 50 x 50 pixel window and over 100 images,

– next the gain of the camera is set to 4 (maximum value), and the exposure time is reduced by a factor of 4 to 10.56 ms,

– the output signal of the sensor as well as its offset value were measured again over the same window and again using 100 images,

– the above sequence of switching between minimum gain and maximum gain (with adjusted exposure time) was repeated 10 times.
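The averaging step in the list above can be sketched as follows. This is only an illustration, not the code used for the measurements: the function name `offset_corrected_mean`, the centred position of the window, and the idea that the offset comes from a separate stack of dark frames are all assumptions.

```python
import numpy as np

def offset_corrected_mean(light_frames, dark_frames, window=50):
    """Mean signal minus mean offset over a window x window region.

    light_frames, dark_frames: stacks of shape (n_images, height, width).
    Assumption: the window is centred on the image; the original
    measurement does not state where the window was placed.
    """
    light = np.asarray(light_frames, dtype=np.float64)
    dark = np.asarray(dark_frames, dtype=np.float64)
    height, width = light.shape[-2:]
    r0 = (height - window) // 2
    c0 = (width - window) // 2
    roi = (slice(None), slice(r0, r0 + window), slice(c0, c0 + window))
    # Average over all images and all pixels in the window, then subtract the offset.
    return light[roi].mean() - dark[roi].mean()
```

Called once per gain setting with 100 illuminated and 100 dark frames, this would give one point of the kind plotted per measurement number.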

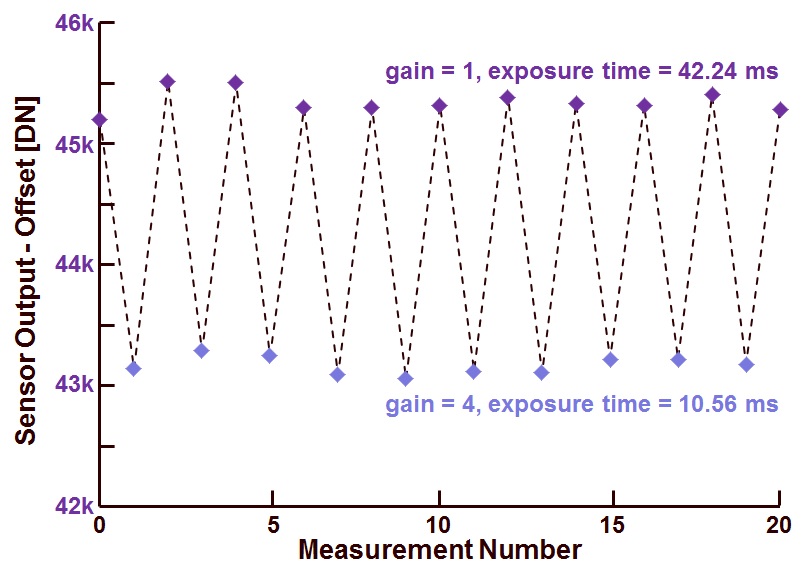

The result of this measurement is shown in Figure 1.

Figure 1 : Sensor output value (corrected for the offset) as a function of measurement number, for each measurement the camera gain and exposure time are matched to each other.

On the horizontal axis the measurement number is shown, on the vertical axis the corrected sensor output is shown. A couple of observations can be made :

– the sensor output values obtained at low gain, high exposure time are not equal to each other. Despite the large amount of data that is averaged, still quite a bit of noise is present,

– the sensor output values obtained at high gain, low exposure time are not equal to each other either,

– in principle a change in gain should be perfectly compensated by an inverse change of the exposure time, but this cannot be seen in the measurements either. Other measurements also showed that the ratio of the sensor outputs does not exactly match the ratio of the camera gain settings. So if gain = 1 indeed corresponds to a gain of 1, the gain = 4 setting is not exactly equal to a gain factor of 4, but comes much closer to 3.81.
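The relation between the two settings can be written down as a small calculation. The sketch below assumes that the sensor response is linear in exposure time and that the illumination is constant; the function name `effective_gain` is hypothetical and is not part of any camera software.

```python
def effective_gain(signal_low, signal_high, t_low_ms, t_high_ms, gain_low=1.0):
    """Estimate the real gain factor of the high-gain setting.

    signal_low, signal_high: offset-corrected outputs at low and high gain.
    t_low_ms, t_high_ms: the matching exposure times in milliseconds.
    Assumption: output = gain * exposure time * constant, so the output
    per millisecond scales directly with the gain.
    """
    rate_low = signal_low / t_low_ms
    rate_high = signal_high / t_high_ms
    return gain_low * rate_high / rate_low
```

With the exposure times used here (42.24 ms and 10.56 ms), an effective gain of 3.81 instead of 4 would mean that the high-gain output sits at 3.81 / 4, or about 95 %, of the low-gain output.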

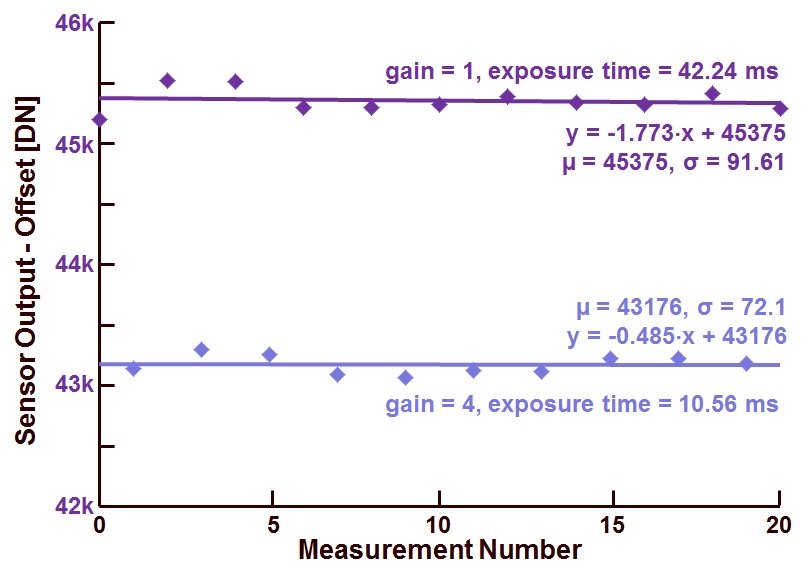

The same data as in Figure 1 is repeated in Figure 2, but now with a regression line calculated for each of the two sets of data (low gain and high gain).

Figure 2 : Same data as present in figure 1, but now with the regression lines added.
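A regression line per data set, together with the deviation of each point from that line, could be computed along these lines. This is a minimal sketch assuming an ordinary least-squares straight-line fit with NumPy; the function name `fit_and_residuals` is hypothetical, and the original figures may have been produced with a different tool.

```python
import numpy as np

def fit_and_residuals(measurement_numbers, outputs):
    """Fit a straight line and return (slope, intercept, residuals).

    measurement_numbers: x values of one data set (low gain or high gain).
    outputs: the matching offset-corrected sensor outputs.
    """
    x = np.asarray(measurement_numbers, dtype=np.float64)
    y = np.asarray(outputs, dtype=np.float64)
    # Degree-1 polynomial fit: coefficients come back highest power first.
    slope, intercept = np.polyfit(x, y, 1)
    residuals = y - (slope * x + intercept)
    return slope, intercept, residuals
```

Running it once on the low-gain points and once on the high-gain points gives two residual series that can be plotted against measurement number to look for a common pattern.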

A more-than-interesting remark can be made now that the regression lines are added : there seems to be a pattern in the deviation of the measured data from the regression line. Any idea where this effect is coming from ? You get a bottle of good French wine for the correct explanation !

Albert, 20-08-2013.