While doing all these non-linearity measurements, I thought of a kind of test to check reciprocity. What is reported here is not exactly what the definition of reciprocity means, but it is related to it.

The procedure is as follows:

– the sensor is illuminated with a fixed DC-powered LED light source,

– at a camera gain setting equal to 1 (minimum value), the exposure time is adjusted such that the output value is about 75 % of saturation. Under the conditions present, the exposure time turned out to be 42.24 ms,

– the output signal of the sensor as well as its offset value are measured by averaging over a 50 x 50 pixel window and over 100 images,

– next the gain of the camera is set to 4 (maximum value) and the exposure time is reduced by a factor of 4 to 10.56 ms,

– the output signal of the sensor as well as its offset value are measured again over the same window, again using 100 images,

– the above sequence of switching between minimum gain and maximum gain (with adjusted exposure time) was repeated 10 times.
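The averaging described in the steps above can be sketched as follows. This is only a minimal illustration, assuming each image is available as a list of pixel rows; the function names `roi_mean` and `corrected_output`, the window position, and the pixel values are all hypothetical and not taken from the actual measurement software.

```python
def roi_mean(frames, top, left, size=50):
    """Average pixel value over a size x size window, across all frames."""
    total = 0.0
    count = 0
    for frame in frames:
        for row in frame[top:top + size]:
            for px in row[left:left + size]:
                total += px
                count += 1
    return total / count


def corrected_output(light_frames, dark_frames, top, left, size=50):
    """Illuminated average minus offset (dark) average over the same window."""
    return (roi_mean(light_frames, top, left, size)
            - roi_mean(dark_frames, top, left, size))


# Tiny made-up example: 3 frames of 4 x 4 pixels, 2 x 2 window (illustration only).
light = [[[3000] * 4 for _ in range(4)] for _ in range(3)]
dark = [[[200] * 4 for _ in range(4)] for _ in range(3)]
signal = corrected_output(light, dark, top=1, left=1, size=2)
print(signal)
```

In the real experiment the same call would be made with 100 frames and a 50 x 50 window, once per gain setting and per repetition.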

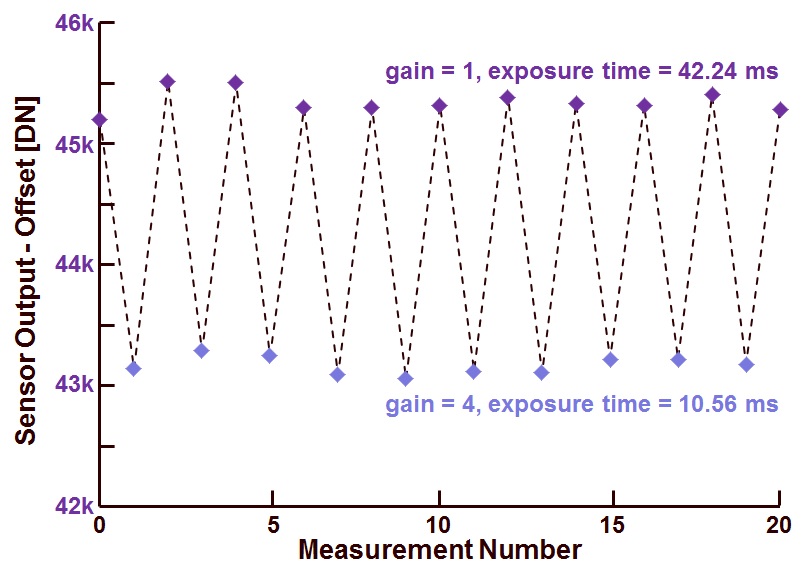

The result of this measurement is shown in Figure 1.

Figure 1 : Sensor output value (corrected for the offset) as a function of measurement number; for each measurement the camera gain and exposure time are matched to each other.

On the horizontal axis the measurement number is shown, on the vertical axis the corrected sensor output is shown. A couple of observations can be made :

– the sensor output values obtained at low gain, long exposure time are not equal to each other. Despite the large amount of data that is averaged, quite a bit of noise is still present,

– the sensor output values obtained at high gain, short exposure time are not equal to each other either,

– in principle a change in gain should be perfectly compensated by an inverse change of the exposure time, but this cannot be seen in the measurements either. Other measurements also showed that the ratio of the sensor outputs does not exactly match the ratio of the camera gain settings. So if gain = 1 indeed corresponds to a gain of 1, the gain = 4 setting does not exactly equal a gain factor of 4, but comes much closer to 3.81.
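The relation between the two outputs and the effective gain can be written out as a short sketch. It assumes a linear sensor and constant illumination, so that the offset-corrected output is proportional to gain times exposure time. The function name `effective_gain` and the two output values are hypothetical, chosen only so that the result matches the 3.81 quoted above; they are not the measured data.

```python
def effective_gain(out_low_gain, out_high_gain, t_low_gain_ms, t_high_gain_ms):
    """Gain of the high setting relative to the low setting.

    Assumes output is proportional to gain * exposure time
    (linear sensor, constant light level).
    """
    return (out_high_gain / out_low_gain) * (t_low_gain_ms / t_high_gain_ms)


# Exposure times from the text; output values are made up for illustration.
g = effective_gain(out_low_gain=3000.0, out_high_gain=2857.5,
                   t_low_gain_ms=42.24, t_high_gain_ms=10.56)
print(f"effective gain at setting 4: {g:.2f}")
```

If the gain setting of 4 were exact, the two offset-corrected outputs would be equal and the function would return 4.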

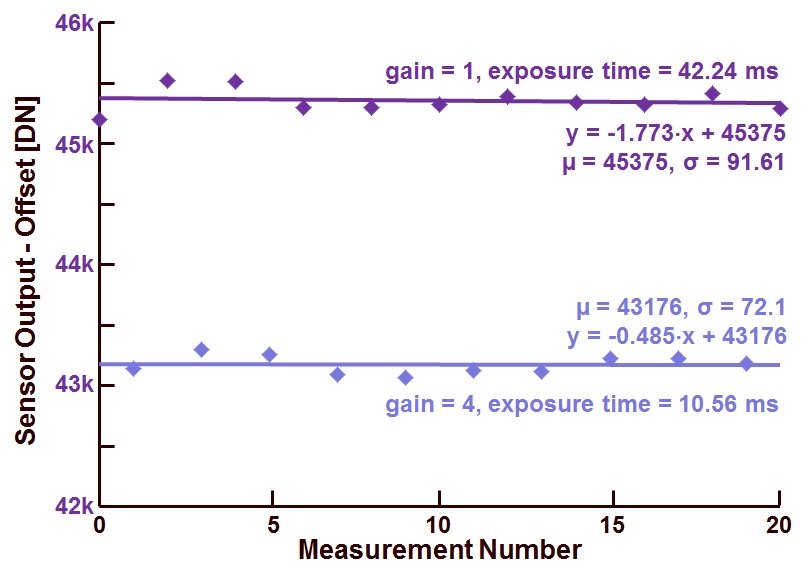

The same data as in Figure 1 are repeated in Figure 2, but now with a regression line calculated for each of the two data sets (low gain and high gain).

Figure 2 : Same data as present in figure 1, but now with the regression lines added.

A more-than-interesting remark can be made now that the regression lines are added: there seems to be a pattern in the deviation of the measured data from the regression line. Any idea where this effect is coming from? You get a bottle of good French wine for the correct explanation!
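One way to make the pattern in the deviations quantitative is to fit a least-squares line to each data set and correlate the two sets of residuals. The sketch below assumes each gain setting gives one value per repetition; the helper names and all numbers are made up for illustration and are not the measured data.

```python
def fit_line(xs, ys):
    """Ordinary least-squares line through (xs, ys); returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def residuals(xs, ys):
    """Deviation of each point from its own regression line."""
    slope, intercept = fit_line(xs, ys)
    return [y - (slope * x + intercept) for x, y in zip(xs, ys)]


def correlation(a, b):
    """Pearson correlation between two equally long sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    var_a = sum((p - mean_a) ** 2 for p in a)
    var_b = sum((q - mean_b) ** 2 for q in b)
    return cov / (var_a * var_b) ** 0.5


# Made-up values: two series sharing the same slow fluctuation.
runs = list(range(1, 11))
shared_drift = [1.0, -1.0, 2.0, -2.0, 0.0, 1.0, -1.0, 2.0, -2.0, 0.0]
low_gain = [3000.0 + 0.5 * x + d for x, d in zip(runs, shared_drift)]
high_gain = [2850.0 + 0.1 * x + d for x, d in zip(runs, shared_drift)]

r = correlation(residuals(runs, low_gain), residuals(runs, high_gain))
print(f"correlation of residuals: {r:.3f}")
```

A correlation close to 1 would point to a cause common to both settings, while a value near 0 would suggest independent noise.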

Albert, 20-08-2013.

Let me throw a couple of wild guesses:

- temperature, if the x-axis is on the order of minutes/hours, which is probably not the case…

- variations in the actual flux of the source (AC variation on the DC bias), if the x-axis is on the order of seconds.

With regard to the gain not being 4, do you have any info on the circuitry used? My guess is a low open-loop gain of the amplifier being used. E.g. for a switched-cap amp with the capacitor ratio setting the gain, the finite open-loop gain of the actual amplifier will lower the real gain of the circuit. In that case the gain of 1 would in reality also be lower than 1. If this is indeed the type of amplifier, you can calculate the actual gains and the open-loop gain of the amplifier.
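As a rough sketch of that calculation: assume a textbook switched-capacitor amplifier whose ideal gain G is set by the capacitor ratio, with feedback factor 1/(1 + G) and no parasitic capacitance. The closed-loop gain is then G / (1 + (1 + G)/A) for open-loop gain A. This circuit model is an assumption, not something known about this camera, and the function names are hypothetical.

```python
def closed_loop_gain(ideal_gain, open_loop_gain):
    """Actual gain of the assumed switched-cap stage with finite open-loop gain."""
    return ideal_gain / (1.0 + (1.0 + ideal_gain) / open_loop_gain)


def open_loop_gain_from_ratio(measured_ratio, gain_low=1.0, gain_high=4.0):
    """Solve the model above for A, given the measured high/low gain ratio."""
    k = gain_high / gain_low
    return ((k * (1.0 + gain_low) - measured_ratio * (1.0 + gain_high))
            / (measured_ratio - k))


a = open_loop_gain_from_ratio(3.81)
g1 = closed_loop_gain(1.0, a)
g4 = closed_loop_gain(4.0, a)
print(f"A = {a:.1f}, actual gains = {g1:.3f} and {g4:.3f}, ratio = {g4 / g1:.2f}")
```

Under this simplified model, the measured ratio of 3.81 would correspond to an open-loop gain of about 58, with both actual gains below their nominal values.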

I have another guess.

The patterns shown in Figure 2 show the same tendency in the deviation of the data from the regression line for both data sets. I think the variation shown in this measurement plot might indicate the DNL of the imager.

Gain change of 3.81 – if the camera is a CCD, then it can be a variable-gain amplifier effect, non-linearity of its settings, or proximity to the saturation region.

It can also be an effect of incomplete photodiode reset before exposure.

Pattern – in addition to David’s comments, fluctuations of conversion gain, e.g. due to drift of internal camera power supplies or similar.

The sensor is a CMOS device. Albert.

The horizontal axis is in the range of minutes; taking 100 images takes about 5 seconds.

The small fluctuations could be because you moved during the experiment, causing a change in stray light.

The other possibility is that the LED is in reality pulsed.

As for the gain: don’t believe the settings given for the CMOS imager.

Drift of the LED light source, as David also wrote. Maybe LED temperature drift. Can you plot (shot) noise vs. image #, and do you then see the same trend?

1. Check the gain stages within the sensor. Are they analog or digital?

If the gain stage is within the ADC (i.e. changing VREF) then you could have an issue in the tuning or configuration of the device.

2. Identify if a digital processing block is turned on such as lens vignetting correction or any type of defect correction.

The experiment tells us that the amount of illumination changed.

You moved during the experiment and changed the amount of stray light.

The LED is pulsed, the output power of the LED is not constant, etc.

As for the gain, don’t believe the docs.

Does the sensor have a single-channel CFA? A Bayer CFA?

If this is a multi-color CFA and you measure a single channel, then this could be a result of optical/electrical cross-talk, which acts before the analog gain.

As David wrote, fluctuation in the light source. This can be due to temperature variations in the LEDs. Can you plot shot noise vs. measurement #, and does this show the same pattern? Or measure the light intensity over time with a power meter.

Hi Albert

my spontaneous thoughts are:

1) direct incidence of light on the sense node, or at least some leakage to the sense node, which generates a larger signal for longer integration times

2) 1/f noise shows up as the slow fluctuations

Another suggestion of my colleague Michael:

image lag, which depends on the amount of electrons inside the PPD, and thus, generates the constant offset as well

Regards from Zurich!

Beni

Sorry Albert, my comment on the leakage current to the sense node does not make sense of course. What I had in mind was a constant injection of some leakage charge (for example diffusing from the bulk). But that would mean a larger signal for larger gain of course. So I withdraw this comment. 🙂

Let’s say the average level before offset is close to saturation, but at gain 1 the noise distribution is not clipped, or only slightly clipped, on the right; the clipping then shifts the mean by practically 0. Now at gain 4, while the average level should be the same, the noise distribution is significantly clipped on the right, so the clipping shifts the mean downwards, hence what we observe.

Is it the right answer?
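The size of such a clipping effect can be estimated analytically for Gaussian noise. For a Gaussian with mean μ and standard deviation σ clipped from above at level c, the mean shifts by σ·(−φ(z) + z·Q(z)), with z = (c − μ)/σ, φ the standard normal density, and Q the upper tail probability. The sketch below evaluates this; the signal level, clip level, and noise values are hypothetical and chosen only to illustrate the argument.

```python
import math


def clipped_mean_shift(mean, sigma, clip_level):
    """Change in the mean of a Gaussian when values above clip_level are clipped."""
    z = (clip_level - mean) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    upper_tail = 0.5 * math.erfc(z / math.sqrt(2.0))
    return sigma * (-pdf + z * upper_tail)


# Hypothetical numbers: same mean level, wider noise at the higher gain.
shift_gain1 = clipped_mean_shift(mean=3900.0, sigma=30.0, clip_level=4095.0)
shift_gain4 = clipped_mean_shift(mean=3900.0, sigma=120.0, clip_level=4095.0)
print(f"shift at gain 1: {shift_gain1:.6f}")
print(f"shift at gain 4: {shift_gain4:.3f}")
```

The shift is always negative or zero, and it grows in magnitude as the distribution widens relative to the headroom below the clip level.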

Non-ideal matching between the sampling and feedback capacitor in the PGA?

How can you be sure that gain 4 = 4 × gain 1?

When the cameras we build state gain 1, it’s just a number in the system, a digital representation of the lowest amount of gain added to the image. Gain 4 ≠ 4 × gain 1.

Remember, gain is just an analog multiplication of electrical charge; it’s not implicitly a whole number.

My initial guess for the two gain settings not being the same was non-linearity in the analog signal chain, meaning even before the variable gain amplifier (I assume the gain amplifier has a close to perfect gain): Pixel output is not linear with

1) its charge on the sense node (junction cap -> voltage dependent)

2) source-follower (back-gate effect) and

3) select switch (output voltage dependent resistance).

But these effects should all result in an inverse effect: with higher signals, the gain becomes smaller.

What I can imagine just from the data you gave us, is non-perfect charge transfer in the PPD-pixel (it is a PPD, isn’t it?). If a small amount of charge is not transferred, this will have more effect on the smaller signal than on the one with Tint=40ms. Is the ratio the same for other integration time pairs?

About the variation of the results: they show similar curves at both gain settings, so they must come from a global effect:

– Temperature/voltage drift on the illumination

– Temperature/voltage drift on the sensor, sensor head

– Background light: is the setup completely shielded against it? Was it a partially clouded day?

best regards

Stray light is not possible: the measurements are done in a light-tight box, so all stray light from external sources is shielded.

The LED is not pulsed, but is connected to a DC power supply.

Gain (whether correct or not) is corrected; the question is about the low-frequency drift of the output signals.

Gain stage: the gain is applied in the analog domain. The gain stage is NOT within the ADC.

Lens vignetting is OFF, and the results shown are coming from a 50 x 50 ROI in the middle of the sensor, so most probably without significant vignetting.

It is a monochrome device, no CFA at all.

Gain differences are corrected in software; it is the low-frequency shift I am after.

Following my last guess, I notice that the slope of the regression line for x4 gain is close to 1/4 of that for x1 gain, which indicates the source might be located after the amplifier, so the non-linearity of the ADC?

This is my explanation: the sensor will draw more power during read-out than during exposure. Therefore the average power usage of the two cases is different, as the sensor will draw less (average) power when the exposure is longer, resulting in less heating of the sensor. You are constantly switching between these two power states, and therefore between two temperatures, causing the oscillation to occur and then decrease with time as the sensor finds an equilibrium between the two temperature states. This oscillation in temperature probably then ripples through to the image via dark current, causing the LF oscillation in the average image level.

If you repeat the experiment and first do 10 measurements with 10ms and then ten with 40ms, I think this oscillation is not present.

Why are you monkeying with the integration times? Why not just adjust the on-time of the LED (electronically or with a shutter)?

I have seen something like this before, when trying to calibrate the sensor with a constant light source and just varying the integration time. It is great in theory, but real-life issues get in the way.

My guess is that you are using an integrate-then-read type sensor. I forget exactly what the issue was that I was seeing, but I remember it had something to do with the reset mode of the sensor.

With a constant pixel clock/frame rate, the reset time was not constant between the two integration times. With a flat image (or maybe it was a dark subtracted image), you could clearly see a discontinuity in the image that was related to the length of the integration. At a constant frame rate, when the integration time was small, the artifact was at the top of the image, and as it increased, the artifact would move down towards the bottom of the image frame.

I think that the artifact could be calibrated out, but only at the same integration time. (You would need to vary the light source to get multiple levels.)

The area of pixels that you are using is probably on one side, and then the other of the time related artifact.

What brand sensor is this? (So we know what not to buy in the future 🙂 )

The oscillations are probably related to temperature; maybe you could do some of the experiments mentioned above (like all short exposures, then all long exposures).

Did you check the gain stability vs. temperature? Or does the software gain correction have a look-up table for temperature?

Why are you monkeying with the integration time rather than just gating (physically or electronically) the LED?

I had seen something similar to this on a CMOS ROIC. The issue was how the reset circuitry works. I would guess that your sensor has a constant frame/pixel rate, and that you were just adjusting the integration time (i.e. integration time is a multiple of the line rate or some such).

I ran into a situation where, as we varied the integration time, we saw an artifact that moved from the ‘top’ to the ‘bottom’ of the image frame. I believe that this artifact would normally calibrate out, but it was visible in some conditions. It was many moons ago; I don’t remember if the artifact impacted gain or offset.

My guess is that this type of artifact moved from one side of the measured pixel region to the other, and so it impacted the raw measured data.

Are flat frames available of both ‘dark’ and ‘light’ at both integration times?

I’ll take a stab

1) Each sample here is the difference between the average signal under LED illumination and the average in the dark for the same integration time, but these two values are derived from two different data sets. So a temperature-induced change in DN could cause the fluctuation. But it is too hard to tell without a signal vs. variance plot at various temperatures.

2) A power supply fluctuation would cause the analog voltage levels to fluctuate. Since this is an average of 100 images, that would probably average out, but sometimes there are low-frequency drifts in the power supply.

So temperature or power supply variation are my best bets.