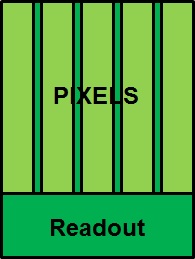

The stitching story started in the previous blog is not yet complete. This post deals with one-dimensional versus two-dimensional stitching, and with single reticle versus multiple reticle stitching.

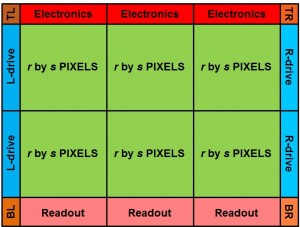

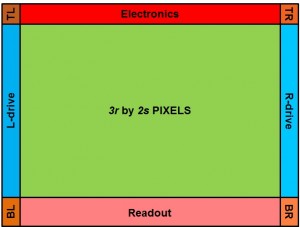

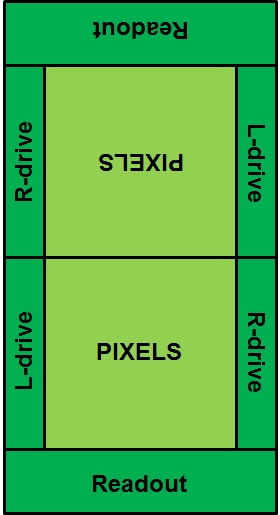

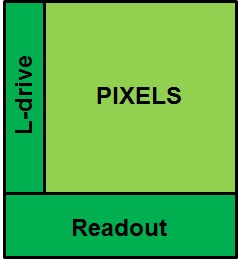

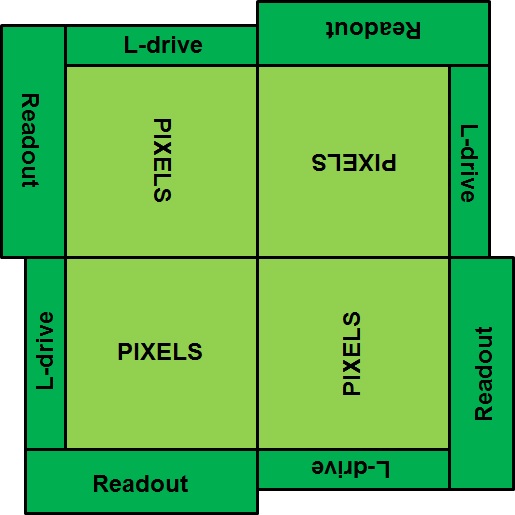

The difference between one-dimensional and two-dimensional stitching is straightforward. If the stitching is done in one direction (vertical OR horizontal), it is known as one-dimensional stitching; if the stitching is done in two directions (vertical AND horizontal), it is known as two-dimensional stitching. Two-dimensional stitching clearly gives the designer much more freedom, and allows devices of practically any size to be designed (within the limits of the wafer). Most of today’s lithographic equipment is capable of two-dimensional stitching, but in the early days some types of alignment machines allowed only one-dimensional stitching. For image sensors, the very first one-dimensional stitching was done by E2V, while the very first two-dimensional stitching was realized by Philips Research Labs.
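To make the distinction concrete, here is a minimal Python sketch under a deliberately simplified, hypothetical model: one exposure prints a rectangular pixel block, blocks are repeated edge to edge, and a stitch line runs across the whole array at every block boundary. The function names (`stitch_grid`, `stitching_type`, `stitch_lines`) and the 20 mm block size are illustrative assumptions, not parameters of any real lithography tool.

```python
import math


def stitch_grid(array_w_mm: float, array_h_mm: float,
                block_w_mm: float, block_h_mm: float) -> tuple[int, int]:
    """Number of pixel-block exposures needed horizontally (nx) and vertically (ny).

    Hypothetical, simplified model: one exposure prints a pixel block of
    block_w_mm x block_h_mm, and blocks are repeated edge to edge.
    """
    nx = math.ceil(array_w_mm / block_w_mm)
    ny = math.ceil(array_h_mm / block_h_mm)
    return nx, ny


def stitching_type(nx: int, ny: int) -> str:
    """Classify the stitching needed for an nx x ny grid of pixel blocks."""
    if nx == 1 and ny == 1:
        return "no stitching"
    if nx == 1 or ny == 1:
        return "one-dimensional"  # blocks repeated in one direction only
    return "two-dimensional"      # blocks repeated vertically AND horizontally


def stitch_lines(nx: int, ny: int) -> int:
    """Stitch lines crossing the array: (nx - 1) vertical plus (ny - 1) horizontal."""
    return (nx - 1) + (ny - 1)


if __name__ == "__main__":
    # Hypothetical example: each exposure prints a 20 mm x 20 mm pixel block.
    for w, h in [(18, 18), (60, 18), (60, 60)]:
        nx, ny = stitch_grid(w, h, 20, 20)
        print(f"{w} x {h} mm: {nx} x {ny} blocks, "
              f"{stitching_type(nx, ny)}, {stitch_lines(nx, ny)} stitch lines")
```

In this toy model a 60 mm x 18 mm array needs a 3 x 1 grid of blocks, which an aligner limited to one-dimensional stitching can still handle, whereas a 60 mm x 60 mm array needs a 3 x 3 grid and therefore two-dimensional stitching.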

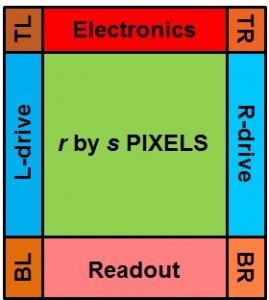

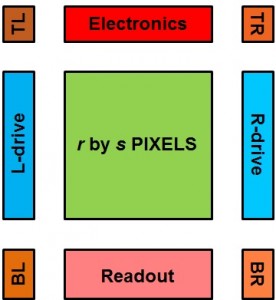

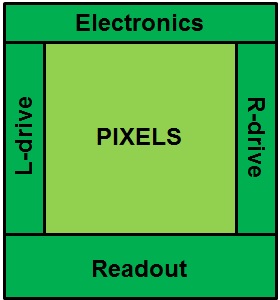

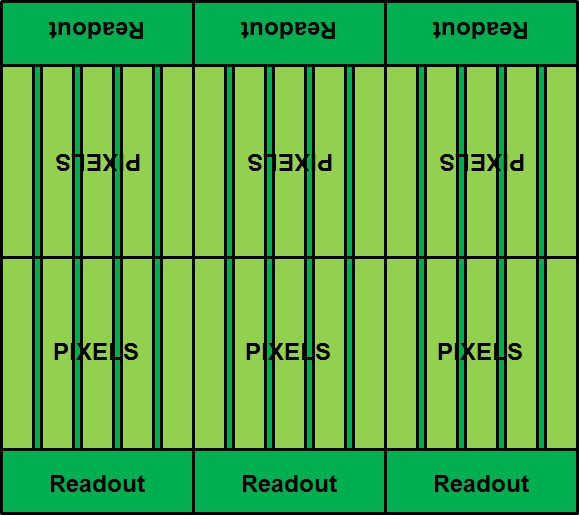

Another important distinction is between single reticle stitching and multiple reticle stitching. If the field of view of the lithographic machine is limited, then (to limit the number of stitch lines in the active imaging area) the full reticle field should be devoted to an array of pixels. Consequently, all peripheral parts and blocks need to be put on a second reticle. This design strategy is known as multiple reticle stitching. Unfortunately, most fabs are not keen on multiple reticle stitching, because the reticles have to be exchanged during the exposure of the wafers. This is time consuming, puts a burden on the use of the equipment and costs a lot of extra money. For that reason, most fabs (if they offer stitching at all) prefer single reticle stitching. Another important reason to avoid multiple reticle stitching is the cost of the extra mask set: for more advanced CMOS processes, the mask cost is no longer negligible compared to the cost of the wafer processing. Philips Research Labs was the first to fabricate large-area imagers based on multiple reticle stitching.

As a consequence, single reticle stitching is much more common than multiple reticle stitching. In that case, the active imaging array together with the peripheral blocks needs to fit within a single reticle field, which leaves a smaller area for the pixel array (compared to a full reticle field in the case of multiple reticle stitching). This results in more exposures for the active area, more stitch lines, more processing time and more expensive processing. On the other hand, only one mask set is needed.
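The trade-off can be illustrated with a rough Python sketch. All numbers are assumptions chosen for illustration: a 26 mm x 33 mm reticle field (a common scanner field size), a hypothetical 6 mm band of peripheral circuitry that eats into the pixel block in both directions when everything shares one reticle, and a hypothetical 120 mm x 120 mm imager. The helper `pixel_exposures` is likewise hypothetical and counts only the exposures of the pixel array, not of the periphery.

```python
import math


def pixel_exposures(array_w_mm: float, array_h_mm: float,
                    block_w_mm: float, block_h_mm: float) -> tuple[int, int]:
    """Return (exposures, stitch lines) needed to print the pixel array alone.

    Hypothetical model: pixel blocks are repeated edge to edge, and every block
    boundary becomes a stitch line running across the whole array.
    """
    nx = math.ceil(array_w_mm / block_w_mm)
    ny = math.ceil(array_h_mm / block_h_mm)
    return nx * ny, (nx - 1) + (ny - 1)


# Assumed reticle field (26 mm x 33 mm is a common scanner field, used only as an example).
FIELD_W_MM, FIELD_H_MM = 26.0, 33.0
# Hypothetical width of the peripheral blocks sharing the field in single reticle stitching.
PERIPHERY_MM = 6.0
# Hypothetical large-area imager.
ARRAY_W_MM, ARRAY_H_MM = 120.0, 120.0

# Multiple reticle stitching: the full field is pixels; periphery sits on a second reticle.
multi = pixel_exposures(ARRAY_W_MM, ARRAY_H_MM, FIELD_W_MM, FIELD_H_MM)

# Single reticle stitching: periphery shares the field, so the pixel block shrinks.
single = pixel_exposures(ARRAY_W_MM, ARRAY_H_MM,
                         FIELD_W_MM - PERIPHERY_MM, FIELD_H_MM - PERIPHERY_MM)

print(f"multiple reticle: {multi[0]} pixel exposures, {multi[1]} stitch lines")
print(f"single reticle:   {single[0]} pixel exposures, {single[1]} stitch lines")
```

Under these assumed numbers, the multiple reticle layout needs 20 pixel exposures and 7 stitch lines, while the single reticle layout needs 30 exposures and 9 stitch lines. The sketch ignores the extra mask set and the reticle exchanges, which is exactly the cost side that pushes fabs towards single reticle stitching.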

Altogether, in the case of large-area imagers, a designer very often prefers multiple reticle, two-dimensional stitching (to avoid too many stitch lines), while most fabs prefer to avoid stitching altogether. It is not always easy to find an optimum between these two extremes. Only a very limited number of fabs/foundries in the world allow their customers to use two-dimensional, multiple reticle stitching. If stitching is offered at all, the most common option is single reticle stitching in combination with one-dimensional stitching.

Albert, 09-08-2016.